Everything you need to know about Squarespace sitemap.xml files

Does Squarespace create a sitemap?

Squarespace creates a sitemap automatically for you. Every time you create or remove a URL, Squarespace updates your sitemap, so you don’t have to do this manually.

What’s a Squarespace sitemap?

A sitemap (or site map) is like a table of contents for your website. It lists every page URL and shows search engines how your website is structured. Crawlers read the sitemap to understand the website layout, which helps them discover and crawl your pages more efficiently. Being crawled is a prerequisite for appearing in SERPs (search engine result pages), but a sitemap on its own doesn't guarantee higher rankings.

Sitemaps are XML files, and you can view them in your browser at a regular web address.
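If you want to inspect the file programmatically, here's a minimal Python sketch that pulls every page URL out of a sitemap using only the standard library. It assumes the file follows the standard sitemaps.org format (`urlset`, `url` and `loc` elements in the `http://www.sitemaps.org/schemas/sitemap/0.9` namespace). The sample XML and the `extract_urls` name are made up for illustration.

```python
import xml.etree.ElementTree as ET

# Namespace used by sitemaps.org-style sitemap files (assumed format).
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}

# Hypothetical sample sitemap; a real Squarespace sitemap has more entries
# and extra fields such as image metadata.
SAMPLE_SITEMAP = """<?xml version="1.0" encoding="UTF-8"?>
<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url>
    <loc>https://www.yourdomain.com/</loc>
    <lastmod>2024-01-15</lastmod>
  </url>
  <url>
    <loc>https://www.yourdomain.com/blog</loc>
  </url>
</urlset>"""


def extract_urls(sitemap_xml: str) -> list[str]:
    """Return the text of every <loc> element found under a <url> entry."""
    root = ET.fromstring(sitemap_xml)
    return [
        loc.text.strip()
        for loc in root.findall("sm:url/sm:loc", SITEMAP_NS)
        if loc.text
    ]


if __name__ == "__main__":
    for url in extract_urls(SAMPLE_SITEMAP):
        print(url)
```

To run it against your own site, you could download your sitemap first and pass its contents to `extract_urls` instead of the sample string.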

Where can I find my sitemap on Squarespace?

You can view your Squarespace sitemap in your browser by adding /sitemap.xml to your website URL:

For live sites:

https://www.yourdomain.com/sitemap.xml

For built-in Squarespace domains:

https://sitename.squarespace.com/sitemap.xml
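If you check several sites, a small helper can build these addresses for you. In this sketch, `sitemap_url` and its `path` parameter are hypothetical names. It accepts a bare domain or any URL on the site and swaps the path for `/sitemap.xml`, so the same function also works for `/robots.txt`.

```python
from urllib.parse import urlsplit, urlunsplit


def sitemap_url(site: str, path: str = "/sitemap.xml") -> str:
    """Build the sitemap (or other root-level file) URL for a site."""
    # Accept bare domains such as "www.yourdomain.com" by assuming https.
    if "://" not in site:
        site = "https://" + site
    parts = urlsplit(site)
    # Keep scheme and host, replace the path, and drop any query or fragment.
    return urlunsplit((parts.scheme, parts.netloc, path, "", ""))


if __name__ == "__main__":
    print(sitemap_url("www.yourdomain.com"))
    print(sitemap_url("https://sitename.squarespace.com/blog"))
    print(sitemap_url("www.yourdomain.com", path="/robots.txt"))
```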

How can I edit my Squarespace sitemap.xml file?

Squarespace creates and updates your sitemap automatically. You can view the Squarespace sitemap.xml file, but you can't edit it manually.

If you find errors in the sitemap, check that all page URLs are correct. Note that if you've removed a page or set up a 301 redirect for it, the page will no longer appear in the sitemap.

It can take up to 24 hours until changes are reflected in your sitemap.

Which URLs are included in your Squarespace sitemap?

Squarespace sitemaps include all live URLs and image metadata.

They don't include file URLs or password-protected, draft, or removed pages. Pages that 301 redirect to another URL are also left out of the sitemap.xml file, as are any pages with a noindex tag.
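When a page is missing from your sitemap, it can help to run through these rules one by one. The sketch below encodes them as a simple checklist. The `Page` record and its fields are hypothetical and only model the rules described above; they aren't Squarespace's data model or its actual implementation.

```python
from dataclasses import dataclass


@dataclass
class Page:
    """Hypothetical page record; field names are illustrative only."""
    url: str
    is_file: bool = False
    password_protected: bool = False
    draft: bool = False
    removed: bool = False
    redirects_301: bool = False
    noindex: bool = False


def in_sitemap(page: Page) -> bool:
    """Return True if none of the exclusion rules above applies to the page."""
    return not (
        page.is_file
        or page.password_protected
        or page.draft
        or page.removed
        or page.redirects_301
        or page.noindex
    )


def expected_sitemap(pages: list[Page]) -> list[str]:
    """Return the URLs you would expect to see in the sitemap, in input order."""
    return [p.url for p in pages if in_sitemap(p)]


# Hypothetical example pages.
pages = [
    Page("https://www.yourdomain.com/"),
    Page("https://www.yourdomain.com/members", password_protected=True),
    Page("https://www.yourdomain.com/old-page", redirects_301=True),
    Page("https://www.yourdomain.com/thank-you", noindex=True),
    Page("https://www.yourdomain.com/blog"),
]

if __name__ == "__main__":
    print(expected_sitemap(pages))
```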

Why does my Squarespace site not appear in Google search results?

It's normal for new sites not to rank immediately in Google search results. It can take a couple of months, depending on your content and competition. However, you can potentially speed up the process with a few tricks that let Google know about your new, awesome Squarespace site.

Even if you have an established website, it may take a couple of days for new blog posts or URLs to be indexed by Google.

Request indexing by submitting your Squarespace sitemap to Google Search Console

Does Squarespace submit a sitemap to Google?

Search engines can find and crawl sitemaps on their own. However, to speed up indexing, you should submit your Squarespace sitemap to Google via Google Search Console.

If you haven’t verified your site in Google Search Console, you need to do this first.

You can also ask Google to crawl a single URL using the Request Indexing option in the URL Inspection tool. This is recommended when you've made major updates to a page or added a page that isn't ranking yet, but don't overuse this feature.

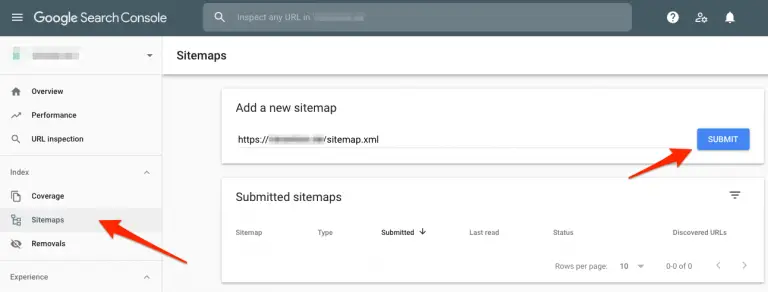

How do I submit my Squarespace site to Google Search Console?

In your Google Search Console account, navigate to Sitemaps in the Index section of the sidebar menu. Enter your sitemap URL and click Submit.

Please note that submitting a sitemap helps Google discover your pages, but it doesn't guarantee that your site will be indexed right away or appear in Google search results. New websites and new articles in particular are often affected by the so-called Google Sandbox effect.

Common sitemap SEO errors

Sitemaps can improve your SEO results by helping search engines understand your site structure. However, some issues can cause on-site SEO errors and hurt your overall site health. These errors show up in your SEO tools and in Google Search Console.

Here are a few common sitemap SEO mistakes:

Orphaned pages: An orphaned page is a URL that has no internal links pointing to it. It's only discoverable through its direct URL, because no other page on your website links to it. This signals to Google a problem in your site structure and suggests that the URL isn't an important page.

Multiple sitemaps on the site: This issue shouldn't affect Squarespace users, because Squarespace creates and updates the XML sitemap for you.

Sitemap.xml not referenced in the robots.txt file: In a nutshell, a robots.txt file tells search engines which pages on your website they may crawl and which they shouldn't. The difference between the two files is that a sitemap gives search engines direct URLs and the structure of your site, so Google and other search engines can more easily read and understand it, while a robots.txt file allows or blocks crawling of specific pages.
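To spot the orphaned pages described in the first item, you can compare the URLs in your sitemap with the internal links found on your pages. This is a minimal sketch under stated assumptions: the link data would come from a crawl or an SEO tool, the sample URLs are invented, and `find_orphans` is a hypothetical helper. URLs also need to be normalized consistently (for example, trailing slashes) before you compare them.

```python
def find_orphans(sitemap_urls: list[str], internal_links: dict[str, set[str]]) -> list[str]:
    """Return sitemap URLs that no other page links to, in sitemap order."""
    linked: set[str] = set()
    for source, targets in internal_links.items():
        # A page linking to itself doesn't make it discoverable, so skip self-links.
        linked.update(t for t in targets if t != source)
    return [url for url in sitemap_urls if url not in linked]


# Hypothetical data: sitemap URLs plus the internal links found on each page.
SITE = "https://www.yourdomain.com"
sitemap = [f"{SITE}/", f"{SITE}/about", f"{SITE}/blog", f"{SITE}/landing"]
links = {
    f"{SITE}/": {f"{SITE}/about", f"{SITE}/blog"},
    f"{SITE}/about": {f"{SITE}/"},
    f"{SITE}/blog": {f"{SITE}/", f"{SITE}/blog"},
    f"{SITE}/landing": {f"{SITE}/"},
}

if __name__ == "__main__":
    print(find_orphans(sitemap, links))
```

Here `/landing` is reported because it links out to the homepage but nothing links back to it.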

Why should you indicate the sitemap.xml in the robots.txt file?

By referencing your sitemap.xml in your robots.txt file, you point Google and other crawlers directly to it.

If your website has both a sitemap and a robots.txt file, it's recommended to reference the sitemap in the robots.txt file to further help search engines understand your website.

How do I add my Squarespace sitemap.xml to my robots.txt file?

Squarespace automatically adds your sitemap.xml to your robots.txt file. As with the sitemap.xml file, you can view the robots.txt file but can't edit it.
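You can confirm that the `Sitemap:` line is present with Python's built-in `urllib.robotparser` module, which can also tell you whether a given crawler may fetch a URL. The robots.txt content below is a hypothetical example, not the file Squarespace actually serves, and `parse_robots` is an illustrative helper name. The `site_maps()` method requires Python 3.8 or later.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content for illustration only; view your real
# file at /robots.txt on your domain.
SAMPLE_ROBOTS = """\
User-agent: *
Disallow: /config
Disallow: /search

Sitemap: https://www.yourdomain.com/sitemap.xml
"""


def parse_robots(text: str) -> RobotFileParser:
    """Parse robots.txt text without making any network request."""
    parser = RobotFileParser()
    parser.parse(text.splitlines())
    return parser


if __name__ == "__main__":
    rp = parse_robots(SAMPLE_ROBOTS)
    print(rp.site_maps())  # sitemap URLs listed in the file, or None
    print(rp.can_fetch("Googlebot", "https://www.yourdomain.com/blog"))
    print(rp.can_fetch("Googlebot", "https://www.yourdomain.com/config"))
```

To check your live file instead, create a `RobotFileParser`, call `set_url()` with your robots.txt address, then call `read()` before querying it.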

Where can I find the robots.txt file in Squarespace?

Add /robots.txt to your primary domain URL to view your robots.txt file.

For live sites:

https://www.yourdomain.com/robots.txt

For built-in Squarespace domains:

https://sitename.squarespace.com/robots.txt

Sascha is a Lifecycle Marketing Consultant with over 8 years of digital marketing experience in Silicon Valley, the UK, and Germany.

After leading demand generation for a 100+ million company, he decided to venture out on his own. He now helps clients attract and convert more leads and customers.

His main focus areas are SEO, paid media, and marketing automation, all aimed at tying marketing campaigns to revenue.

Sascha has been featured in industry publications.